Policy-as-Code for Docker and Kubernetes with Conftest or Gatekeeper

May 12th, 2022

Andrew Poland


In this post, we'll explore how Aserto uses Policy-as-Code to centrally define our key engineering best practices and share them across a diverse set of teams and microservices.

Authorization and Access Management for microservices

Like many organizations today, we rely on containers and microservices as an essential foundation of our architecture, and Kubernetes orchestrates everything we run. However, keeping deployments secure and reliable requires many details to be applied consistently to each service, and Docker and Kubernetes often do not enforce these by default. As we developed our reliability and security programs at Aserto, we looked for a way to ensure that container security best practices are followed and implemented consistently across all of our services.

As an authorization platform, Aserto is latency sensitive and needs to be deployed close to the applications that depend on it. One of our requirements is therefore to be able to roll out our stack to any environment a customer uses, including multiple cloud vendors, regions, and on-premises infrastructure. Most of the options for distributing our policies work well within a single cloud vendor, but with a multi-cloud requirement we wanted a technology that is readily available across a variety of implementations. Since we already use Docker-compatible registry services to distribute our containers between multiple clouds, we found that sharing our policies the same way fit well with the infrastructure available in every environment where we need a presence.

We found that most of the best practices we want to enforce are common to all of our critical microservices, and that many of them lend themselves to automation, such as:

- Enforce the use of only approved golden images in Dockerfiles

- Ensure that containers never run as a privileged user

- Ensure that all containers have network policies

- Ensure that the latest vendor updates are always installed in a container

- Ensure that all deployments go to a dedicated namespace with proper technical, business, and security labels

- Ensure that all production deployments have a minimum number of replicas

- Avoid using StatefulSets unless a use case is understood and acknowledged

- Ensure that all pods have readiness and liveness probes

- Ensure that all pods set resource requirements and limits

- Ensure that all pods emit Prometheus metrics

- Ensure pod disruption budgets are in place

- Block apps from using untracked persistent volumes that might contain state

Our implementation is built on policies written in Rego that check for each of these conditions. On every commit, Conftest runs as part of the CI pipeline to test the infrastructure code. This gives developers quick feedback on whether they conform to best practices and lets us avoid some of the conversations we used to have right as a feature was scheduled to deploy to production. Developers now learn about infrastructure requirements much earlier than before, and as a result, SRE and security teams spend much less time manually policing our deployment structures.
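Many of the checks in the list above reduce to simple lookups on a manifest. As a rough illustration of what such deny rules evaluate (not our actual Rego policy), here is a Python sketch that inspects a Deployment parsed into a dict. The minimum replica count of 2, the message wording, and checking only the container-level `securityContext` are assumptions made for this example.

```python
from typing import Dict, List

# Hypothetical threshold; the real minimum is an organizational choice.
MIN_REPLICAS = 2


def deny(deployment: Dict) -> List[str]:
    """Return violation messages for a Deployment manifest parsed into a dict."""
    msgs = []
    name = deployment.get("metadata", {}).get("name", "<unnamed>")
    spec = deployment.get("spec", {})

    # Kubernetes defaults spec.replicas to 1 when it is omitted.
    if spec.get("replicas", 1) < MIN_REPLICAS:
        msgs.append(f"{name}: replicas must be at least {MIN_REPLICAS}")

    containers = spec.get("template", {}).get("spec", {}).get("containers", [])
    for c in containers:
        cname = c.get("name", "<unnamed>")
        # Readiness and liveness probes must both be declared.
        for probe in ("readinessProbe", "livenessProbe"):
            if probe not in c:
                msgs.append(f"{name}/{cname}: missing {probe}")
        # Both resource requests and limits must be set.
        resources = c.get("resources", {})
        for section in ("requests", "limits"):
            if not resources.get(section):
                msgs.append(f"{name}/{cname}: missing resources.{section}")
        # Simplification: only the container-level securityContext is checked.
        if c.get("securityContext", {}).get("runAsNonRoot") is not True:
            msgs.append(f"{name}/{cname}: securityContext.runAsNonRoot must be true")
    return msgs


# Hypothetical Deployment used to exercise the checks.
deployment = {
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 1,
        "template": {"spec": {"containers": [{
            "name": "app",
            "image": "example/app:1.0",
            "readinessProbe": {"httpGet": {"path": "/ready", "port": 8080}},
            "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}},
        }]}},
    },
}

for msg in deny(deployment):
    print(msg)
```

Against this sample, the sketch reports four violations: too few replicas, a missing liveness probe, missing resource requests, and no `runAsNonRoot` setting. In Rego, each condition would typically be its own `deny` rule, which Conftest evaluates over the parsed YAML.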

The key to making this effective is being able to easily update the policy code and distribute it to every CI pipeline that uses it. When we change our best-practices policies, we simply publish a new version of the policy bundle to a container registry. Development branches that track the latest tag of the policy bundle pick up the change on their next CI run, and developers are alerted to the new standards right away during the CI testing step.

To accomplish this we use the following tools:

- Open Policy Agent (OPA) - An open source, general-purpose policy engine that enables unified, context-aware policy enforcement. OPA is embedded in numerous tools that enforce policy.

- https://www.openpolicyagent.org/

- Rego - The language used by OPA to write declarative, easily extensible policy decisions.

- https://www.openpolicyagent.org/docs/latest/policy-language/

- OPCR - An OCI-compatible registry (like Docker Hub) to store and share policies

- https://www.openpolicyregistry.io/

- GitHub Actions - For version control and CI/CD workflows

- https://github.com/features/actions

- Cosign - A tool to sign policy containers and verify their signatures

To make this concrete and actionable, without having to wade through Aserto-specific policy, the rest of this post defines some simple policies for Conftest and Gatekeeper, and demonstrates our end-to-end workflow.

For some examples of defined policies you can have a look at these repositories:

https://github.com/aserto-demo/policy-conftest

https://github.com/aserto-demo/policy-gatekeeper

And we have published a sample application with workflows that consume these policy checks here:

https://github.com/aserto-demo/kube-policy-demo-app

If you'd like to try this out yourself, you can fork each of these repositories and fill in your own credentials based on the requirements in the README files.

For example, Rego code to enforce our image policy looks like this:

https://github.com/aserto-demo/policy-conftest/blob/main/policy/images.rego

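```rego
package main

# Base images that Dockerfiles are not allowed to use
denylist = ["python", "node", "ruby", "openjdk"]

deny[msg] {
  # Conftest parses the Dockerfile into a list of instructions
  some i
  input[i].Cmd == "from"
  val := input[i].Value

  # The first value of a FROM instruction is the image reference
  contains(val[0], denylist[_])

  msg = sprintf("unallowed image found %s", [val])
}
```

If you're new to pushing policy to a registry, you can practice by using the Policy CLI to build and push the policy from your local machine. Run the following steps from the policy-conftest repository directory.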

Getting Started:

- Register for an OPCR account at https://www.openpolicyregistry.io/ - choose your organization name here

- Download and install the Policy CLI from the OPCR site

- Download cosign from https://github.com/sigstore/cosign

The Policy CLI follows the same conventions as Docker for building, pushing, and pulling policy. Execute these commands to log in to the policy registry:

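```shell
export ORG_NAME=<My OPCR Org Name>
export GITHUB_PAT=<My GitHub Personal Access Token>
echo $GITHUB_PAT | policy login -u $ORG_NAME --password-stdin
# cosign reads registry credentials from Docker's config, so log in there as well
echo $GITHUB_PAT | docker login opcr.io -u $ORG_NAME --password-stdin
```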

Generate a public/private keypair for cosign (one-time step). This writes cosign.key and cosign.pub to the current directory:

```shell
cosign generate-key-pair
```

Build and push the policy:

```shell
policy build . -t $ORG_NAME/policy-conftest:1.0.0
policy push $ORG_NAME/policy-conftest:1.0.0
```

Sign the policy:

```shell
cosign sign --key cosign.key opcr.io/$ORG_NAME/policy-conftest:1.0.0
```

Pull and save the policy:

```shell
policy pull $ORG_NAME/policy-conftest:1.0.0
policy save $ORG_NAME/policy-conftest:1.0.0
```

Check the policy signature:

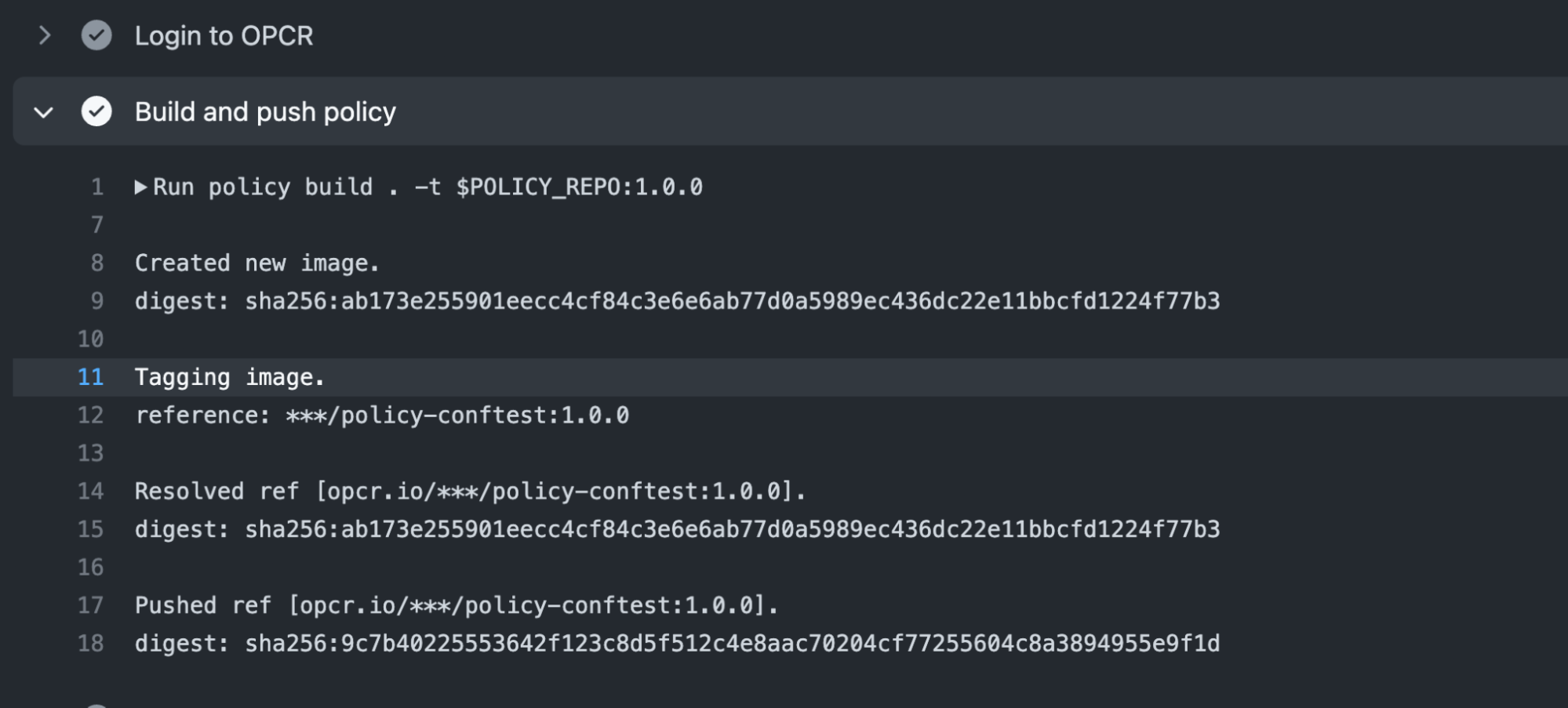

```shell
cosign verify --key cosign.pub opcr.io/$ORG_NAME/policy-conftest:1.0.0
```

To demonstrate how we automate building and pushing policy, let's look at some GitHub Actions workflows. First, our security team uses a workflow that builds and publishes our policy to OPCR, then signs the policy bundle with their private key. We use the following steps in both of our policy repositories, policy-conftest and policy-gatekeeper:

```yaml
- name: Login to OPCR
  run: |
    echo ${{ secrets.POLICY_PASSWORD }} | policy login -u ${{ secrets.POLICY_USERNAME }} --password-stdin
    # cosign pushes signatures using Docker's registry credentials
    echo ${{ secrets.POLICY_PASSWORD }} | docker login opcr.io -u ${{ secrets.POLICY_USERNAME }} --password-stdin

- name: Build and push policy
  run: |
    policy build . -t $POLICY_REPO:1.0.0
    policy push $POLICY_REPO:1.0.0

- name: Sign policy
  run: |
    # If the key is password-protected, cosign reads the password from COSIGN_PASSWORD
    echo "${{ secrets.COSIGN_PRIVATE_KEY }}" > cosign.key
    cosign sign --key cosign.key opcr.io/$POLICY_REPO:1.0.0
```

Now that the policy is published, our development teams can consume it in their own workflows by pulling the published bundle and checking its signature against the security team's public key. Here's the code that does that for us:

```yaml
- name: Login to OPCR
  run: |
    echo ${{ secrets.POLICY_PASSWORD }} | policy login -u ${{ secrets.POLICY_USERNAME }} --password-stdin

- name: Check policy signature
  run: |
    # Quote the key so its multi-line PEM content is written intact
    echo "${{ secrets.COSIGN_PUBLIC_KEY }}" > cosign.pub
    cosign verify --key cosign.pub opcr.io/$POLICY_REPO:1.0.0

- name: Pull policy
  run: |
    policy pull $POLICY_REPO:1.0.0
    # Stream the bundle to stdout and unpack it into the working directory
    policy save $POLICY_REPO:1.0.0 -f - | tar -zxvf -
```

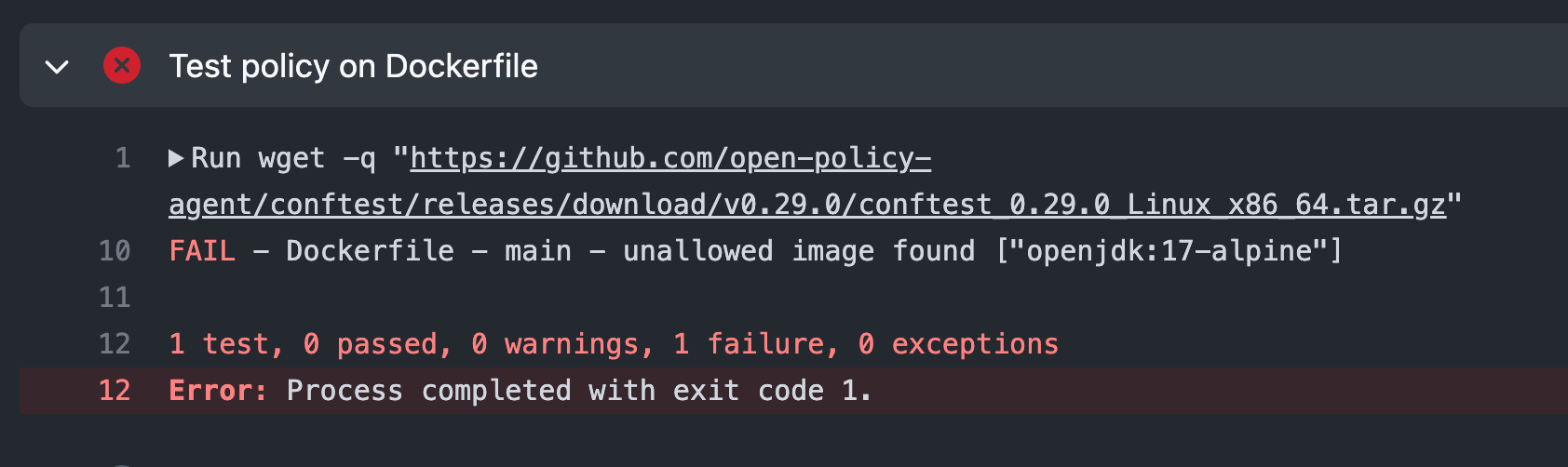

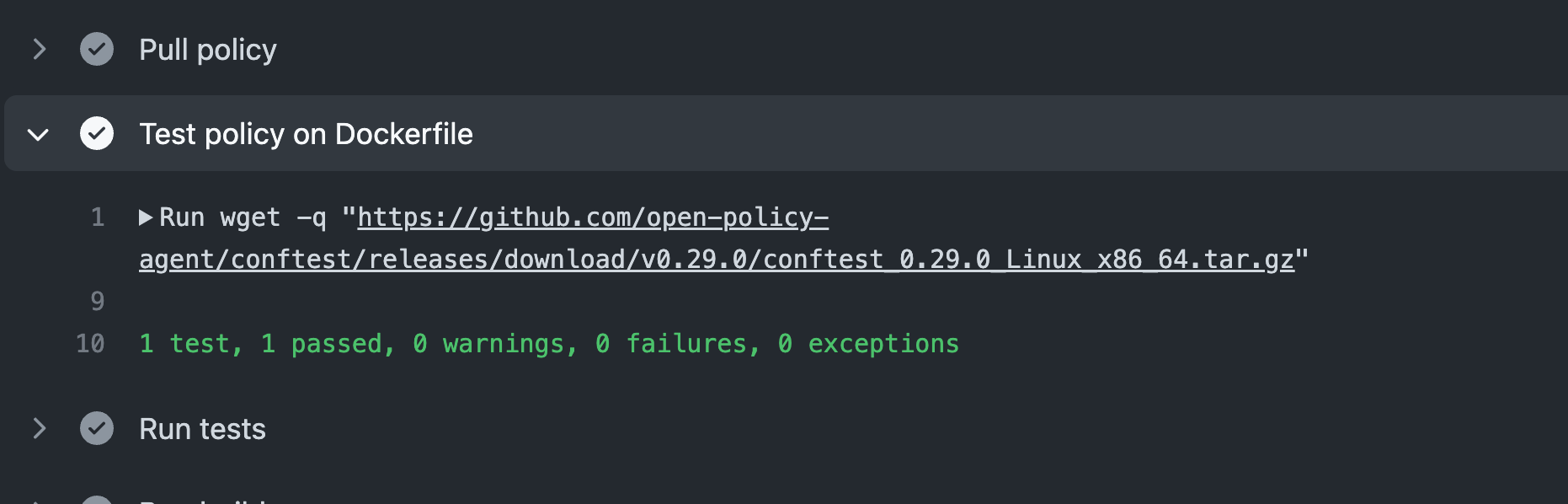

```yaml
- name: Test policy on Dockerfile
  run: |
    conftest test Dockerfile
```

Now we can run through some tests to show our workflows in action. The first time we execute the CI workflow on kube-policy-demo-app, we get a failure that proves our policy was correctly enforced. The Dockerfile for this app is attempting to use the OpenJDK image, which is on our list of denied images.

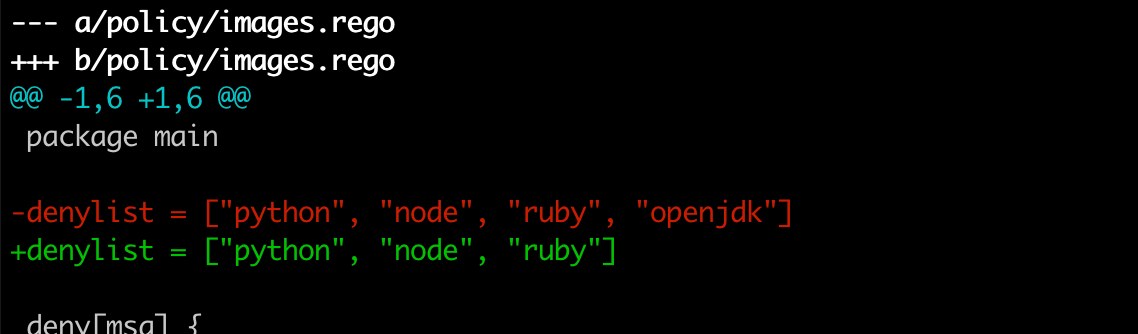

Let's change the policy to allow our developer to use OpenJDK. We do this by editing policy-conftest/policy/images.rego, which we looked at earlier.

After we remove OpenJDK from the denylist, we commit the change and observe that the action in the policy-conftest repo publishes a new policy image:

Now we can check to see if our new policy is enforced by rebuilding the application. This time the policy test succeeds since our OpenJDK image is no longer banned.

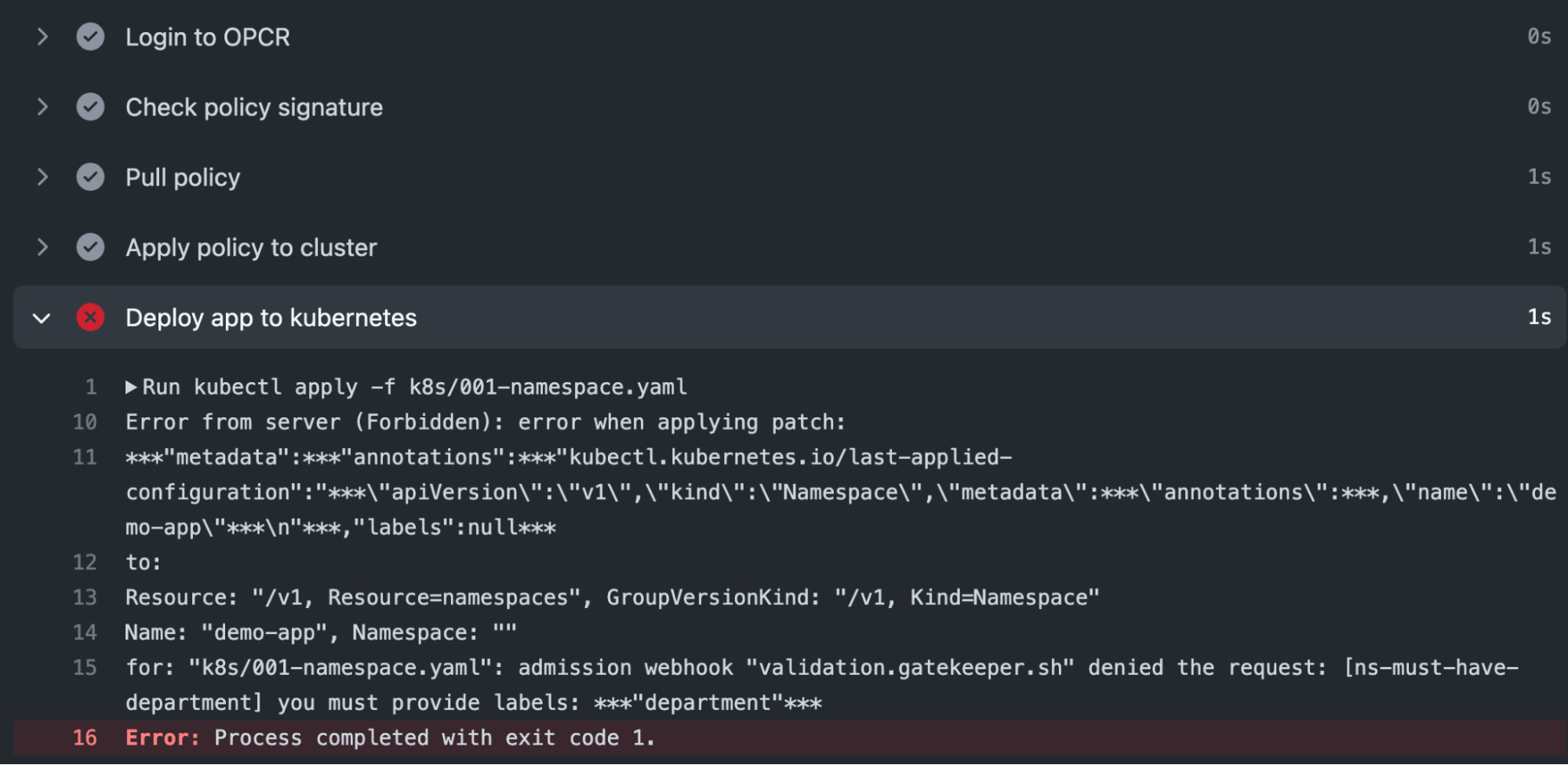
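To see why the first run failed and the second passed, here is a Python sketch that mirrors the images.rego logic. The Dockerfile parser is a deliberate simplification of the structured input Conftest produces (one entry per instruction with `Cmd` and `Value` fields), and the Dockerfile contents are hypothetical, not the demo app's actual file. Because the sketch inspects every FROM instruction, it also catches denied base images in later stages of a multi-stage build.

```python
from typing import Dict, List


def parse_dockerfile(text: str) -> List[Dict]:
    """Rough stand-in for Conftest's Dockerfile input: one dict per instruction.

    Ignores line continuations, heredocs, parser directives, and flags such
    as --platform (Conftest keeps those in a separate Flags field).
    """
    instructions = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        cmd, *values = line.split()
        instructions.append({"Cmd": cmd.lower(), "Value": values})
    return instructions


def deny(instructions: List[Dict], denylist: List[str]) -> List[str]:
    """Flag any FROM whose image reference contains a denylisted name."""
    msgs = []
    for inst in instructions:
        if inst["Cmd"] == "from" and inst["Value"]:
            image = inst["Value"][0]  # FROM <image> [AS <name>]
            # Substring match, like Rego's contains()
            if any(banned in image for banned in denylist):
                msgs.append(f"unallowed image found {image}")
    return msgs


# Hypothetical Dockerfile for illustration
dockerfile = """
FROM openjdk:11-jre-slim
COPY app.jar /app.jar
CMD ["java", "-jar", "/app.jar"]
"""

original = ["python", "node", "ruby", "openjdk"]
edited = ["python", "node", "ruby"]  # OpenJDK removed from the denylist

print(deny(parse_dockerfile(dockerfile), original))  # ['unallowed image found openjdk:11-jre-slim']
print(deny(parse_dockerfile(dockerfile), edited))    # []
```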

Our second example requires access to a Kubernetes cluster. This policy uses Gatekeeper, which must first be installed on the cluster:

```shell
kubectl apply -f https://raw.githubusercontent.com/open-policy-agent/gatekeeper/release-3.8/deploy/gatekeeper.yaml
```

We set up a policy using Rego code to check for required labels:

```rego
package k8srequiredlabels

violation[{"msg": msg, "details": {"missing_labels": missing}}] {
  # Labels present on the object under review
  provided := {label | input.review.object.metadata.labels[label]}
  # Labels required by the constraint's parameters
  required := {label | label := input.parameters.labels[_]}
  missing := required - provided
  count(missing) > 0
  msg := sprintf("you must provide labels: %v", [missing])
}
```

(In our real infrastructure, a separate workflow applies policy to the cluster. For simplicity, in this example we apply it alongside our deployment.)
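The Gatekeeper rule boils down to a set difference between the labels a constraint requires and the labels the object provides. This Python sketch mirrors that logic on a Namespace parsed into a dict. The required label `owner` and the namespace name are hypothetical; in Gatekeeper, the required labels come from the constraint's parameters (`input.parameters.labels`).

```python
from typing import Dict, List, Optional


def violation(obj: Dict, required_labels: List[str]) -> Optional[Dict]:
    """Return a violation dict if obj lacks any required label, else None."""
    # metadata.labels may be absent or null in a manifest
    labels = obj.get("metadata", {}).get("labels") or {}
    provided = set(labels)           # label keys present on the object
    required = set(required_labels)  # label keys the constraint demands
    missing = required - provided
    if missing:
        return {
            "msg": f"you must provide labels: {sorted(missing)}",
            "details": {"missing_labels": missing},
        }
    return None


# Hypothetical namespace without labels
namespace = {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": "demo-app"}}
print(violation(namespace, ["owner"]))  # reports the missing "owner" label

# Adding the label satisfies the constraint
namespace["metadata"]["labels"] = {"owner": "sre-team"}
print(violation(namespace, ["owner"]))  # None
```

Using sets means extra labels on the object never cause a violation; only required keys that are absent end up in `missing`.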

When the CD workflow runs, we can see that our policy requiring all namespaces to have labels is enforced. We are not allowed to proceed with the deployment because our namespace lacks the required labels:

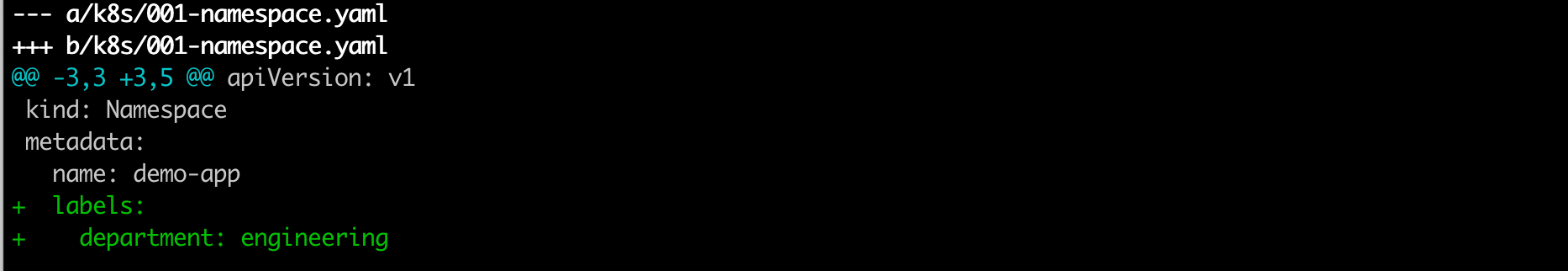

To remedy this our developer can modify the namespace definition to add a label:

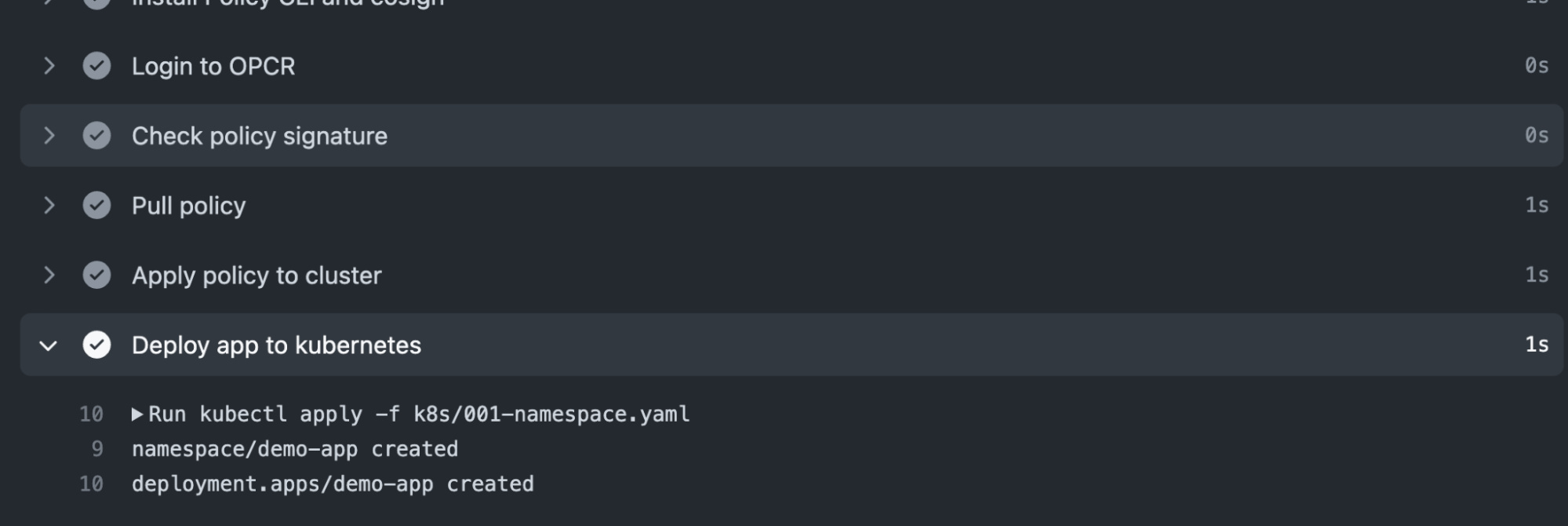

Since we've satisfied the policy requirements, our deployment workflow succeeds:

You can see how this comes together into a DevOps-friendly workflow that brings the benefits of image sharing, such as versioning, registry-based distribution, and signing, to your policies.

Andrew Poland

Senior Reliability Engineer
